No posting until after Labor Day. I'll be off the grid in the desert for a little over a week: no phone, no e-mail, no SMS, and no internet. I will be bringing a small Raspberry Pi-based server with me, so technically there will be WiFi, but only on a local intranet that I'm setting up, with no uplink to the outside world. See you when I get back.

Saturday, August 24, 2013

Thursday, August 22, 2013

Theory Thursday, with bonus Metaphysics: Gödel's Incompleteness Theorem

Gödel's Incompleteness Theorem is one of those things that makes pop science writers go gaga. And, I'm not going to be contrarian: it's profound, it's meta, it's paradoxical, and it has a lot to say about what we can accomplish as human beings. But, at its core, it's really a programming problem, albeit one with very interesting implications*.

In the 1920s, in the shadow of The Great War, David Hilbert set out to do something monumental, something that would bring together mathematicians from all over the world, in a grand challenge: to formulate all of mathematics rigorously, and provably, starting from a single set of axioms. It came to be known as Hilbert's Program, and the name has an unintentional level of acuity: it really boils down to a system of encodable rules which one can use to generate mathematically true statements. Then, by utilizing these rules in a fully deterministic fashion, you can prove mathematical theorems. By iterating with those rules, you could theoretically enumerate all possible mathematically true statements: it's a programming language for generating All Mathematical Laws!
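To make "a programming language for generating theorems" concrete, here is a minimal sketch. As a toy stand-in (an assumption for illustration; no real axiomatization of arithmetic is this small), it uses Douglas Hofstadter's MIU system from Gödel, Escher, Bach: a single axiom, `MI`, and four rewrite rules. The function names `successors` and `enumerate_theorems` are hypothetical, not from any library. The enumerator applies the rules mechanically, breadth-first, which is exactly the "iterate the rules and list everything true" idea.

```python
from collections import deque

AXIOM = "MI"  # the single starting string of Hofstadter's MIU system


def successors(s: str) -> set[str]:
    """Apply each MIU rule everywhere it fits and return the new strings."""
    out = set()
    # Rule I: xI -> xIU
    if s.endswith("I"):
        out.add(s + "U")
    # Rule II: Mx -> Mxx
    if s.startswith("M"):
        out.add(s + s[1:])
    # Rule III: replace any occurrence of III with U
    for i in range(len(s) - 2):
        if s[i:i + 3] == "III":
            out.add(s[:i] + "U" + s[i + 3:])
    # Rule IV: delete any occurrence of UU
    for i in range(len(s) - 1):
        if s[i:i + 2] == "UU":
            out.add(s[:i] + s[i + 2:])
    return out


def enumerate_theorems(limit: int) -> list[str]:
    """Breadth-first enumeration: every derivable string eventually appears."""
    seen = {AXIOM}
    order = [AXIOM]
    queue = deque([AXIOM])
    while queue and len(order) < limit:
        current = queue.popleft()
        for nxt in sorted(successors(current)):  # sorted for a stable order
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
                if len(order) >= limit:
                    break
    return order


print(enumerate_theorems(6))  # ['MI', 'MII', 'MIU', 'MIIII', 'MIIU', 'MIUIU']
```

Breadth-first order guarantees that any string derivable from `MI` shows up eventually. What the enumeration can never tell you, no matter how long it runs, is that `MU` is not a theorem: Hofstadter's puzzle is settled only by stepping outside the system and noticing that the number of I's is never a multiple of 3.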

Except...for that "theoretically" bit. Turns out, it's not true. Kurt Gödel spent a lot of time trying to prove the completeness and self-consistency of such systems, but eventually wound up proving just the opposite: he demonstrated that you could construct the following types of statements in this mathematical language:

- Statements about the provability of other statements (e.g., Statement X is unprovable)

- Statements that reference themselves (e.g., This sentence has N characters.)

- By combining the two, you can construct statements that reference their own provability, e.g.:

This theorem is unprovable.

This is similar in construction to the classic form of Russell's Paradox ("Does the barber who shaves everyone who doesn't shave themselves shave himself?"), but with a twist: in this case, if the statement is provable, the system has proven something false, which is a paradox. However, if it is actually unprovable, there's no paradox; the statement is simply correct. Therefore, assuming the system is consistent, the statement is both unprovable and true. Hence, Gödel showed that such a system is incapable of proving a demonstrably true statement, and cannot be complete. As you might guess, there's a lot of overlap between this finding and computability theory, and it can even be expressed in terms of the latter (for example, through Turing's proof that the halting problem is undecidable), which brings it strongly into the realm of computer science.
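The diagonal trick at the heart of both Gödel's construction and Turing's halting-problem proof fits in a few lines. This is a sketch under a big simplification: a "list of everything the system can decide" is modeled as a Python list of predicates on integers, and nothing here reproduces Gödel's actual encoding of statements as numbers. The hypothetical `diagonal` function builds a predicate that disagrees with the i-th entry on input i, so it cannot be any entry in the list: if it were entry k, it would have to disagree with itself at k.

```python
from typing import Callable, Sequence

Predicate = Callable[[int], bool]


def diagonal(fs: Sequence[Predicate]) -> Predicate:
    """Return a predicate d with d(i) != fs[i](i) for every index i in fs."""

    def d(n: int) -> bool:
        if 0 <= n < len(fs):
            # Flip the answer the n-th predicate gives about its own index.
            return not fs[n](n)
        # Beyond the list, the value is irrelevant to the argument.
        return False

    return d


# A (necessarily incomplete) "list of all predicates".
candidates: list[Predicate] = [
    lambda n: n % 2 == 0,
    lambda n: n > 3,
    lambda n: True,
    lambda n: n == 3,
]
d = diagonal(candidates)
# d disagrees with every candidate somewhere, so it is not in the list.
print([d(i) != candidates[i](i) for i in range(len(candidates))])  # [True, True, True, True]
```

Swap in any list you like; the construction always produces something the list missed. That is, in spirit, the move that lets Gödel exhibit a true statement a consistent system cannot prove.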

But, one of the things that has always stuck out to me about the Incompleteness Theorem is what it has to say about what it is we can possibly know about the world, and what this means for our perception of reality. I was raised in a moderately religious household, and eventually, in the course of my own explorations, became very observant, went to Jewish summer camp, studied the Torah, and tried, to the best of my ability, to puzzle out what it was God wanted out of us. But, over the years, the relentlessly empirical life of the academic scientist took a toll on my faith, at first subtly, but like a river grinding out the Grand Canyon, its effect was profound over the 10 years I spent as a physicist. And, one day, I woke up and realized: there was no room in The Mechanical Universe for an omniscient, omnipotent being**. Plus, the psychological and sociological reasons for us to invent such a being were too clear to ignore. It was just too facile a solution to the difficult problem of What Do I Do With My Life.

But even so, I've never been able to call myself an atheist, because, in spite of all my book larnin', I know what I don't know. On the one hand, at the most granular scale, almost everything I've ever learned about the physical world is premised on an unprovable hypothesis: that the things that we observed yesterday are a good guide to what we will observe tomorrow. It's unprovable, because the only evidence that we have for the inductive principle is the inductive principle itself: it has worked well in the past, so it should continue to work. I have no problem using the inductive principle as a guide, as a way to decide which insurance to buy, or how to spot a mu meson. But in matters of spiritual life or death, can we really count on something so flimsy?

And then, on the grand, cosmic scale, we have the Incompleteness Theorem: I know that there are things out there that are true but can't be systematically proven. In fact, Gödel's construction implies that there's an infinite number of them. If that's true, I can prove and prove and prove to my heart's content, but I can never be sure that I haven't failed to observe something that could turn my entire understanding around. There's just too much truth out there, and not enough time.

So, what are we to make of the universe? Nothing is provable, and there's an infinity of unprovable true things that we'll never know. Given all that, can we really rule out, conclusively, the existence of something outside of this mortal coil? And so ends my catechism.

*However, while it's really cool, it doesn't have something to say about everything: Carl Woese once asked me to write a paper on what the implications of the incompleteness theorem were for biological evolution, and Douglas Hofstadter happened to be giving a colloquium that week. I went down early before the colloquium and accosted him over coffee to ask his thoughts on the matter. He considered it briefly, and then said, "I don't think there are any."

**The Mechanical Universe used to be shown on PBS in Chicago when I was growing up, and I found every single episode, from Newton's Laws to Relativity, to be absolutely mesmerizing. It had a strong impact on my decision to study physics.

Wednesday, August 21, 2013

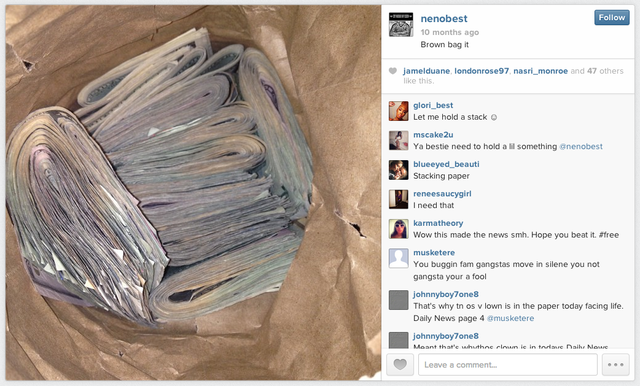

Is Earlybird or X-Pro II better for your wads of cash?

I was at a conference once on technology and law enforcement, and there was a gentleman presenting some sort of Twitter-monitoring technology on behalf of IBM. Because I was at the booth next to him, I got to hear his pitch dozens of times, describing a specific scenario in which a small-time drug dealer was coordinating with clients over Twitter. As the day went on, the size of the fish he had caught got bigger and bigger, and the tone of his pitch more and more frenzied, until finally he jumped the shark and told someone that "nowadays all crime is coordinated over Twitter." Hah, Twitter is so 2012! In 2013, all crime is coordinated over Instagram:

Aspiring rapper's Instagram photos lead to largest gun bust in New York City history

Also in today's news: Supreme Court justices don't use e-mail, which probably makes it difficult for them to opine on things like net neutrality, whether metadata qualifies for Fourth Amendment protections, and a host of other technologically relevant issues that are currently defining our society. Technological innovation is occurring at an ever faster rate, and has clearly passed the rate at which Supreme Court justices die, which means that this will be a permanent state of affairs. They may begin to understand e-mail around the time they are asked to rule on the legality of remote-scanning your flying car's hyperdigicube transponder.

Monday, August 19, 2013

The Three P's of Big Data: Plumbing, Payment, and Politics

In a panel discussion at Georgetown Law School, I discussed some of the barriers to actually using data, at scale, in a large organization, to drive change. I lump these barriers into three categories, which I call The Three P's: Plumbing, Payment, and Politics. We're a long way from being able to just throw all the information into a computer, ask it a question, and get an answer out, and the things that stand between here and there are my raison d'être. This weekend, the Sunday New York Times dipped its toe into Big Data commentary, with a contrarian take titled Is Big Data an Economic Big Dud?, in which the author asks, bluntly, What's taking so Goddamn long?

[T]he economy is, at best, in the doldrums and has stayed there during the latest surge in Web traffic. The rate of productivity growth, whose steady rise from the 1970s well into the 2000s has been credited to earlier phases in the computer and Internet revolutions, has actually fallen. The overall economic trends are complex, but an argument could be made that the slowdown began around 2005 — just when Big Data began to make its appearance.

This is a worthy topic of discussion, but the article is a mish-mash, lumping things like streaming video, text messaging, Cisco, and analytics companies under the title "Big Data", and asking why this "Big Data" hasn't made an impact on the economy (while tacitly admitting that the economy has been in such flux since 2005 that asking what the impact of any particular development is amounts to asking why the wind direction didn't change when you passed gas.) In some sense, the very fact that the New York Times has covered it means that it may have already peaked, because once the Sunday Times becomes aware of a technological development, it's reached the level that geriatric crossword-puzzle enthusiasts might actually be dimly aware of it. Witness:

Other economists believe that Big Data’s economic punch is just a few years away, as engineers trained in data manipulation make their way through college and as data-driven start-ups begin hiring.

(Emphasis mine.) I don't know where the Times gets its information about high-tech hiring, but judging from this sentence, it's probably from the classifieds page of the New York Times.

Paul Krugman, as usual, has a more nuanced and interesting set of questions:

OK, we've been here before; there was a lot of skepticism about the Internet too — and I was one of the skeptics. In fact, there was skepticism about information technology in general; Robert Solow quipped that “You can see the computer age everywhere but in the productivity statistics”. But here’s another instance where economic history proved very useful. Paul David famously pointed out (pdf) that much the same could have been said of electricity, for a long time; the big productivity payoffs to electrification didn't come until after around 1914.

Why the delays? In the case of electricity, it was all about realizing how to take advantage of the technology, which meant reorganizing work. A steam-age factory was a multistory building with narrow aisles; that was to minimize power loss when you were driving machines via belts attached to overhead shafts driven by a steam engine in the basement. The price of this arrangement was cramped working spaces and great difficulty in moving stuff around. Simply replacing the shafts and belts with electric motors didn’t do much; to get the big payoff you had to realize that having each machine powered by its own electric motor let you shift to a one-story, spread-out layout with wide aisles and easy materials handling.

I think it's reasonable to suggest that Big Data™ writ large may never have (and probably won't have) the same impact on the world as electricity or The Intarwebz. But the question of what may be slowing its impact is something on which I am well qualified to comment. I will make more extensive posts about this in the coming weeks and months, but in terms of the three P's, the plumbing pieces are still in their infancy and are hugely labor intensive: getting data from unstructured sources, like documents, images, and video, is nearly impossible without significant cost and data-specific tuning. And even making full use of structured data (databases, XML, etc.) is painful because of the amount of context and integration required.

More importantly, though, data-driven decision making is subject to the politics of any paradigm-shifting change, particularly changes with the potential to show that the Chief Executive Officer is wearing no clothes. Large organizations have been Doing It Wrong™ for so long now that convincing them to take the implications of their data seriously is an uphill battle against politics and entrenched interests. In theory, the market should correct and allow data-driven decisions to lead if they provide a competitive edge. But in practice, pop-culture management books and pop-science provide managers with confirmation bias that their gut instincts are all they need to run a giant organization, data be damned. And even when a CEO wants to make a large-scale change, he has to push it through using hired thugs just to get any traction.

Essentially, we're still at the infancy of the Big Data movement, both in terms of its ease of use, and its cultural adoption. The potential to monetize how we use data for decision making is huge, but there are still significant obstacles to leveraging its potential.

Friday, August 16, 2013

Data Sharing Done Right

By my hackweek teammate Andy I: http://www.marketsforgood.org/beyond-alphabet-soup-5-guidelines-for-data-sharing

Read this if you ever plan to share data publicly.

I Do Not Think This Word Means What You Think It Means

Poor Peter Shih. His rant about the things he hates about San Francisco (ugly girls, homeless people, and not being able to park his car) generated so much derision that he had to take it down and clarify that it was "satire". This is a common defense of bigots and jerks everywhere. Here's the thing: satire is a tool used by the weak against the powerful. It's a way that someone with no power can even the odds, through wit and insight. Here, Shih is the powerful one: he's a YCombinator-backed male with a car and a job, making fun of women, the homeless, and bicyclists. If it were funny, he'd at least be able to claim he was poking fun at himself. But it's not funny. There isn't even a hint of self-deprecation. A vitriolic stream of misogyny and self-entitlement isn't called "satire"; it's called being a jerk. And so ends my catechism.

Tuesday, August 13, 2013

Getting (Real) Things Done

I'm a technologist. I like technology. Specifically, I like bringing real change to real problems, using technology. I spent 10 years as an academic, engaged in, as my father liked to say, the examination of the frontiers of human knowledge using a magnifying glass. But, by far, the thing I loved more than anything else as an academic was to build things, both machines and software. Instead of relying on paper notebooks, I rolled my own web-based lab notebook, in a combination of object-oriented Perl and JavaScript (this was a decade ago, and to this day, my colleagues insist on endorsing me for Perl on LinkedIn, which I think is about as valuable as being endorsed for trepanning.) In the end, one of the reasons I left academia was precisely because I liked building things a lot more than I liked discovering things of marginal value, and one of the reasons I love my job is because the things I build have immense value.

I put all this forward as a form of credentialing, though, to establish my bona fides as a techno-optimist. Because what I really want to say is: the vast majority of the problems in our world will not be solved by apps, hackathons, or data jams. They can only be solved by talking to people.

Let's be honest: most engineers and nerds hate talking to people. I met my wife through a dating website, because I am gripped with terror at the idea of introducing myself to a woman in a social setting. I book my restaurant reservations using OpenTable because restaurant hosts at upscale restaurants are some of the most odious people to talk to on the planet. So, faced with a problem, we will opt to write software to solve it. Witness, for instance, this article about StopBeef.com, a “matchmaking app for murder mediators”, as PandoDaily puts it:

A high profile shooting on Mother’s Day that injured 19 people got hacker Travis Laurendine thinking about how homicide was impacting the city. The National Civic Day of Hacking was fast approaching, so he decided to try “hacking the murder rate” for the New Orleans version of the event.

Laurendine was friends with Nicholas through the rap community, and he turned to him for advice. “I was like: you know this inside and out, what do you think would help?” Laurendine said.

Nicholas told Laurendine about a recent mediation experience he’d had. Two guys were beefing and carried guns. The conflict had escalated over time, and it was starting to look like one would try to take the other out. But Nicholas knew both of them, and one of them asked him to help settle the issue.

An intervention was staged, and the two beefers showed up with their entourages to Nicholas’ restaurant. “They both really wanted to stop the beef,” Nicholas remembers. “I told them it didn’t make sense for them to die over nothing.” The entourages agreed, and with their friends (and a local celebrity) asking them to stop, the beefers were able to end the disagreement without looking like they had backed down.

When Laurendine asked Nicholas for hacker app ideas, Nicholas relayed this story and said, “You need to do something like that! You can hit this app, log in, tell them who you’re beefing with, and someone from the neighborhood can come spoil.”

…

After submitting the stopbeef app to the government, Laurendine was picked as one of the 15 top “Champions of Change” nationally in civic hacking. He’s headed to the White House to receive recognition tomorrow.

I don't mean this as a dig against StopBeef.com, which I'm sure has provided some really valuable outcomes, and may have even saved lives (although the linked article doesn't cite any specific success stories). But it's pretty clear that the key element here is people talking to people. And I have to wonder: if Laurendine et al. had spent the time learning about the community, talking to people, doing volunteer work with local tutoring organizations or Boys and Girls Clubs, or finding a way to actually engage people directly, would it have had a higher impact than the equivalent amount of work spent trying to get their CSS to look just-so?

Social problems are particularly prone to this kind of wishful thinking. When I first started looking for volunteer opportunities in graduate school, I reasoned that there must be dozens of organizations looking for someone to build them a web site or a database, or to maintain their PCs, and that I could bring my highly valuable skill set to bear on these problems, to great plaudits. What I in fact discovered is that volunteer organizations need people to do things for other people, not for machines. And the less interesting and high-profile the work, the more they need it. Habitat for Humanity had so many church groups, schools, and companies calling on them to volunteer that the local chapter didn't even bother to call me back. The San Francisco Food Bank has a waiting list of several weeks if you want to come in and help sort canned goods. Even the Martin De Porres soup kitchen in my neighborhood sometimes has too many people doing dishes when I come in to volunteer. In grad school, I wound up driving a van for a program called Mothers Too Soon. On Thursday nights in the winter, I would pick up 15- to 17-year-old African American girls from the outlying areas of Champaign-Urbana and drive them and their young children to a local church. There, I would sit and read journal articles for a couple of hours while they had dinner and counseling, and then I would drive them back home. It wasn't particularly fun, but that was the point: nobody else wanted to do it, which was why there was a need. The only skills it called for were a driver's license and a willingness to help out.

But, techno-optimism bedevils the corporate world just as much. 99% of my job could be done from the comfort of my home office. I can video teleconference, Webex, call, SSH, and pretty much do all of my job functions without ever so much as standing up. But I hop on a southbound train from San Francisco to Palo Alto every morning at 7:19AM like clockwork, and I fly back and forth to DC and New York (as I am doing right now) several times a month. And I do it because, in spite of all the things I can do with just a computer and a wifi connection, nothing important ever gets accomplished unless I'm there in person. You can commit code to the git repo for years on end, but it's not until a person is actually using it that you've accomplished something.

For example, I have occasionally planned trips to client sites not because I needed to get something done there, but because being there in person forces the client to make sure I have something to do when I arrive. So, I tell them that I'm coming in to configure the networking, and that they need to have the servers racked by Wednesday. The truth is that if they just plug in the Remote Access Console, I can configure the rest of it from Palo Alto, without the need to fly anywhere, or even put my pants on, for that matter. But if I did that, I could spend the next month or more calling, e-mailing, and sending carrier pigeons, for all the good it would do me; the servers would get racked when the IT team had nothing else to do, which is not a condition that has ever prevailed since the invention of the abacus (“Abacus help-desk hours are between 2AM and 4AM on the first new moon of the harvest season. If you require assistance outside of these hours, please consult our rice-paper scroll for self-help and Frequently Asked Questions.”) Is this a huge waste of jet fuel, money, and 3-ounce bottles of shaving cream? Yes. But it's less wasteful than flushing three months of a six-month software pilot down the drain while I wait for the servers to get racked. And while I'm there, I can stop by the analysts' desks and chat with them about their work, their workflow, and what kinds of actual, real-world problems keep them up at night. I can take the client's project lead out to lunch and let them dish face-to-face about who's trying to block the roll-out, and what kinds of changes we might make to turn that person's opinion around. I can ask for an introduction to the head of a different department, or to someone who works at a different firm, and see if they're having the same problem, and whether we can solve it for them too.
And I can hit up my college friends who live in New York and DC, toast heartily to our health, and hear about their latest projects, whether or not they have any bearing on my work, just to expand my mind a bit.

Software and the internet have changed our world, and in most ways, I think it's been for the greater good. People like to throw digs at Facebook for its triviality, but I landed my current job because I connected on Facebook with someone who turned out to be friends with someone I had met years before and not seen since. So, we reconnected and started talking, and when my postdoc was over, he suggested I interview. Not only that, but in the ensuing years, he's become one of my best friends, and was the officiant at my wedding. I can confidently say that my life would be less rich and less happy without Facebook. But the good that Facebook, JDate, and Gmail did for me was that they brought me into contact with people who mattered. The internet has lowered the barriers to human interaction, but it is always the human interaction itself that makes the world a better place. Next time you want to write an app to solve a problem, ask yourself: could I do more by volunteering, or community organizing, or even just reading and learning about this problem, than I could by debugging jQuery? Or am I secretly serving my own interests by doing something I enjoy or learning a new marketable skill, or maybe just avoiding the fact that, for better or for worse, the problems in our world are really, really hard?

I put all this forward as a form of credentialing, though, to give you my bona-fides as a techno-optimist. Because what I really want to say is: the vast majority of the problems in our world will not be solved by apps, hackathons, or data jams. They can only be solved by talking to people.

Let's be honest: most engineers and nerds hate talking to people. I met my wife through a dating website, because I am gripped with terror at the idea of introducing myself to a woman in a social setting. I book my restaurant reservations using Open Table because restaurant hosts at upscale restaurants are some of the most odious people to talk to on the planet. So, faced with a problem, we will opt to write software to solve it. Witness, for instance, this article about StopBeef.com, a “matchmaking app for murder mediators”, as PandoDaily puts it:

A high profile shooting on Mother’s Day that injured 19 people got hacker Travis Laurendine thinking about how homicide was impacting the city. The National Civic Day of Hacking was fast approaching, so he decided to try “hacking the murder rate” for the New Orleans version of the event.

Laurendine was friends with Nicholas through the rap community, and he turned to him for advice. “I was like: you know this inside and out, what do you think would help?” Laurendine said.

Nicholas told Laurendine about a recent mediation experience he’d had. Two guys were beefing and carried guns. The conflict had escalated over time, and it was starting to look like one would try to take the other out. But Nicholas knew both of them, and one of them asked him to help settle the issue.

An intervention was staged, and the two beefers showed up with their entourages to Nicholas’ restaurant. “They both really wanted to stop the beef,” Nicholas remembers. “I told them it didn’t make sense for them to die over nothing.” The entourages agreed, and with their friends (and a local celebrity) asking them to stop, the beefers were able to end the disagreement without looking like they had backed down.

When Laurendine asked Nicholas for hacker app ideas, Nicholas relayed this story and said, “You need to do something like that! You can hit this app, log in, tell them who you’re beefing with, and someone from the neighborhood can come spoil.”

…

After submitting the stopbeef app to the government, Laurendine was picked as one of the 15 top “Champions of Change” nationally in civic hacking. He’s headed to the White House to receive recognition tomorrow.

I don't mean this as a dig against StopBeef.com, which I'm sure has provided some really valuable outcomes, and may have even saved lives (although the linked article doesn't cite any specific success stories.) But, it's pretty clear that the key element here is people talking to people. And I have to wonder: if Laurendine et al had spent the time learning about the community, talking to people, doing volunteer work with local tutoring organizations or Boys and Girls clubs, or finding a way to actually engage people directly, would it have had a higher impact than the equivalent amount of work spent trying to get their CSS to look just-so?

Social problems are particularly prone to this kind of wishful thinking. When I first started looking for volunteer opportunities when I was in graduate school, I reasoned that there must be dozens of organizations looking for someone to build them a web site, or a database, or maintain their PCs, and that I could bring my highly valuable skill set to bear on these problems, to great plaudits. What I in fact, discovered, is that volunteer organizations need people to do things for other people, not for machines. And, the less interesting and high profile the work, the more they need it. Habitat for Humanity had so many church groups, schools, and companies calling on them to volunteer that the local chapter didn't even bother to call me back. The San Francisco Food Bank has a several week waiting list if you want to come in and help sort canned goods. Even the Martin De Porres soup kitchen in my neighborhood sometimes has too many people doing dishes when I come in to volunteer. In grad school, I wound up driving a van for a program called Mothers Too Soon. On Thursday nights, in the winter, I would pick up 15 – 17 year old African American girls from the outlying areas of Champaign-Urbana, and drive them and their young children to a local church. There, I would sit and read journal articles for a couple of hours while they had dinner and counseling, and then would drive them back home. It wasn't particularly fun, but that was the point: nobody else wanted to do it, which was why there was a need. The only skill I had that it utilized was a drivers license, and a willingness to help out.

But, techno-optimism bedevils the corporate world just as much. Ninety-nine percent of my job could be done from the comfort of my home office. I can video teleconference, Webex, call, SSH, and pretty much do all of my job functions without ever so much as standing up. But I hop on a southbound train from San Francisco to Palo Alto every morning at 7:19AM like clockwork, and I fly back and forth to DC and New York (as I am doing right now) several times a month. And I do it because, in spite of all the things I can do with just a computer and a WiFi connection, nothing important ever gets accomplished unless I'm there in person. You can commit code to the git repo for years on end, but it's not until a person is actually using it that you've accomplished something.

For example, I have occasionally planned trips to client sites not because I needed to get something done there, but because being there, in person, forces the client to make sure I have something to do when I arrive. So, I tell them that I'm coming in to configure the networking, and they need to have the servers racked by Wednesday. The truth is that, if they just plug in the Remote Access Console, I can configure the rest of it from Palo Alto, without the need to fly anywhere, or even put my pants on, for that matter. But if I did that, I could spend the next month or more calling, e-mailing, and sending carrier pigeons, for all the good it would do me; the servers would get racked when the IT team had nothing else to do, which is not a condition that has ever prevailed since the invention of the abacus (“Abacus help-desk hours are between 2AM and 4AM on the first new moon of the harvest season. If you require assistance outside of these hours, please consult our rice-paper scroll for self-help and Frequently Asked Questions.”) Is this a huge waste of jet fuel, money, and 3-ounce bottles of shaving cream? Yes. But it's less wasteful than flushing three months of a six-month software pilot down the drain while I wait for the servers to get racked. And, while I'm there, I can stop by the analysts' desks and chat with them about their work, their workflow, and what kinds of actual, real-world problems keep them up at night. I can take the client's project lead out to lunch and let them dish face-to-face about who's trying to block the roll-out, and what kinds of changes we might want to make to turn that person's opinion around. I can ask for an introduction to the head of a different department or someone who works at a different firm, and see if they're having the same problem, and whether we can try to solve it for them too.
And I can hit up my college friends who live in New York and DC, toast heartily to our health, and hear about their latest projects, whether or not they have any bearing on my work, just to expand my mind a bit.

Software and the internet have changed our world, and in most ways, I think it's been for the greater good. People like to throw digs at Facebook for its triviality, but I landed my current job because I connected with someone on Facebook who turned out to be friends with someone I had met years before and not seen since. So, we reconnected, and started talking, and when my postdoc was over, he suggested I interview. Not only that, but in the ensuing years, he's become one of my best friends, and was the officiant at my wedding. I can confidently say that my life would be less rich and less happy without Facebook. But the good that Facebook, JDate, and GMail did for me was that they brought me into contact with people who mattered. The internet has lowered the barriers to human interaction, but it is always the human interaction itself that makes the world a better place. Next time you want to write an app to solve a problem, ask yourself: could I do more by volunteering, or community organizing, or even just reading and learning about this problem, than I could by debugging jQuery? Or am I secretly serving my own interests by doing something I enjoy or learning a new marketable skill, or maybe just avoiding the fact that, for better or for worse, the problems in our world are really, really hard?

Tuesday, August 6, 2013

Something You Will Never Hear in a Spy Movie

"Just run it through one or two databases. No need to go overboard."
